Tame OS — A space to grow ideas.


What excites us most about AI is not just how it could make humans more intelligent or productive, but also more creative. We think AI can become a truly creative thought partner, one that helps you come up with ideas you wouldn't or couldn't have had otherwise. And that isn't limited to classic creative disciplines; it applies to any field where new ideas are needed.

No amount of productivity can match the value of a completely new idea that changes the rules entirely. Given how quickly AI has developed over the past few years, it's only a matter of time before it can generate entirely new and creative ideas. But these ideas only become valuable when put in the right context, which is why we deeply believe in a human-in-the-loop approach. A strong creative partner won’t make your ideas obsolete, but it will likely improve them. Figuring out the right interaction paradigms for that moment felt like an exciting challenge.

Tame OS is our experimental space for exploring new use cases and interactions with AI. We didn't want to limit it to a single use case: our goal is exploration, and committing to one use case might narrow our view of what it could be or where it could be used. Having different explorations under one roof lets us iterate efficiently, because they all share the same technical infrastructure and branding.

Ideas in, ideas out

While many AI apps focus on general assistance, productivity, image generation, or code, we focus on ideas. Tame OS is a space to grow them.

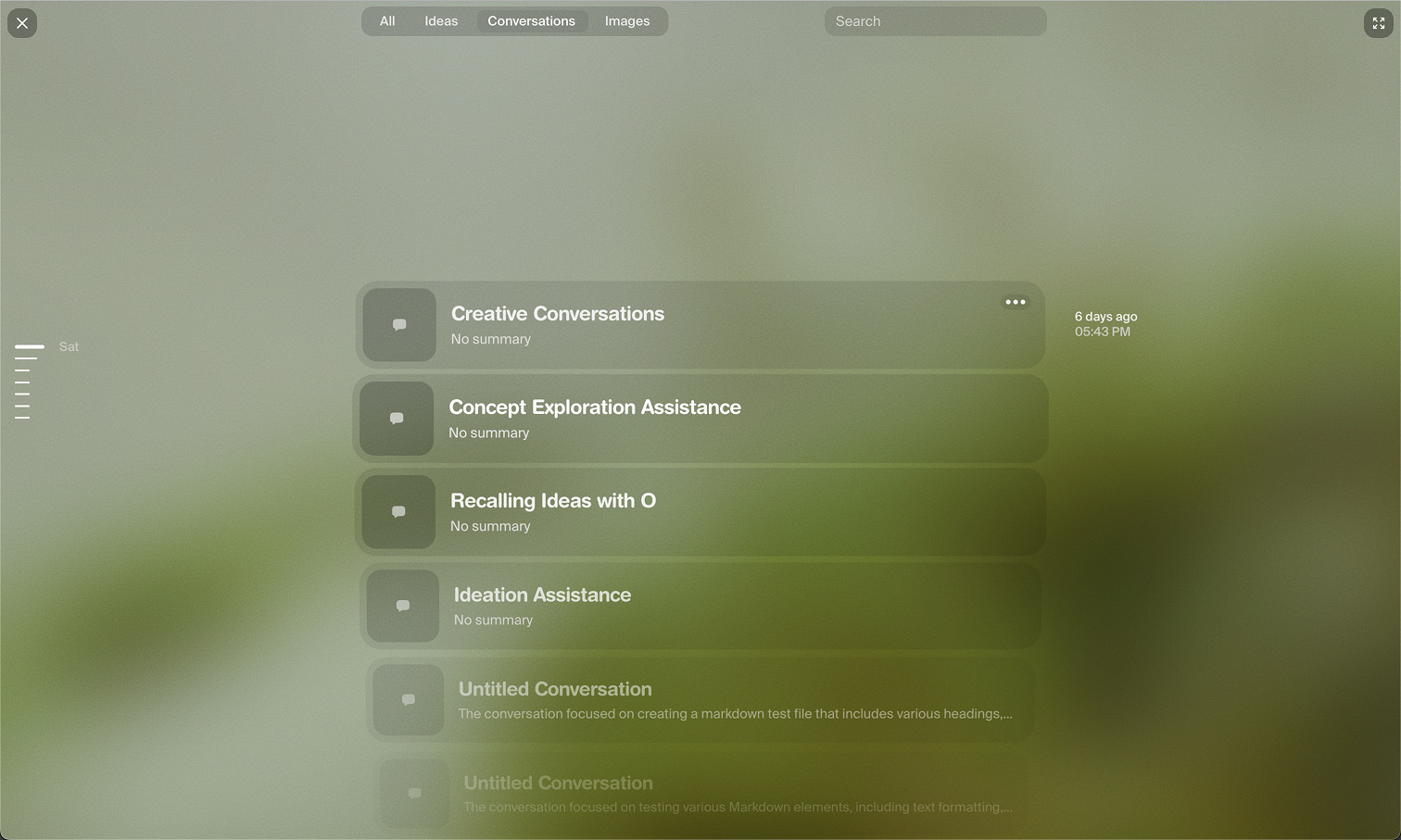

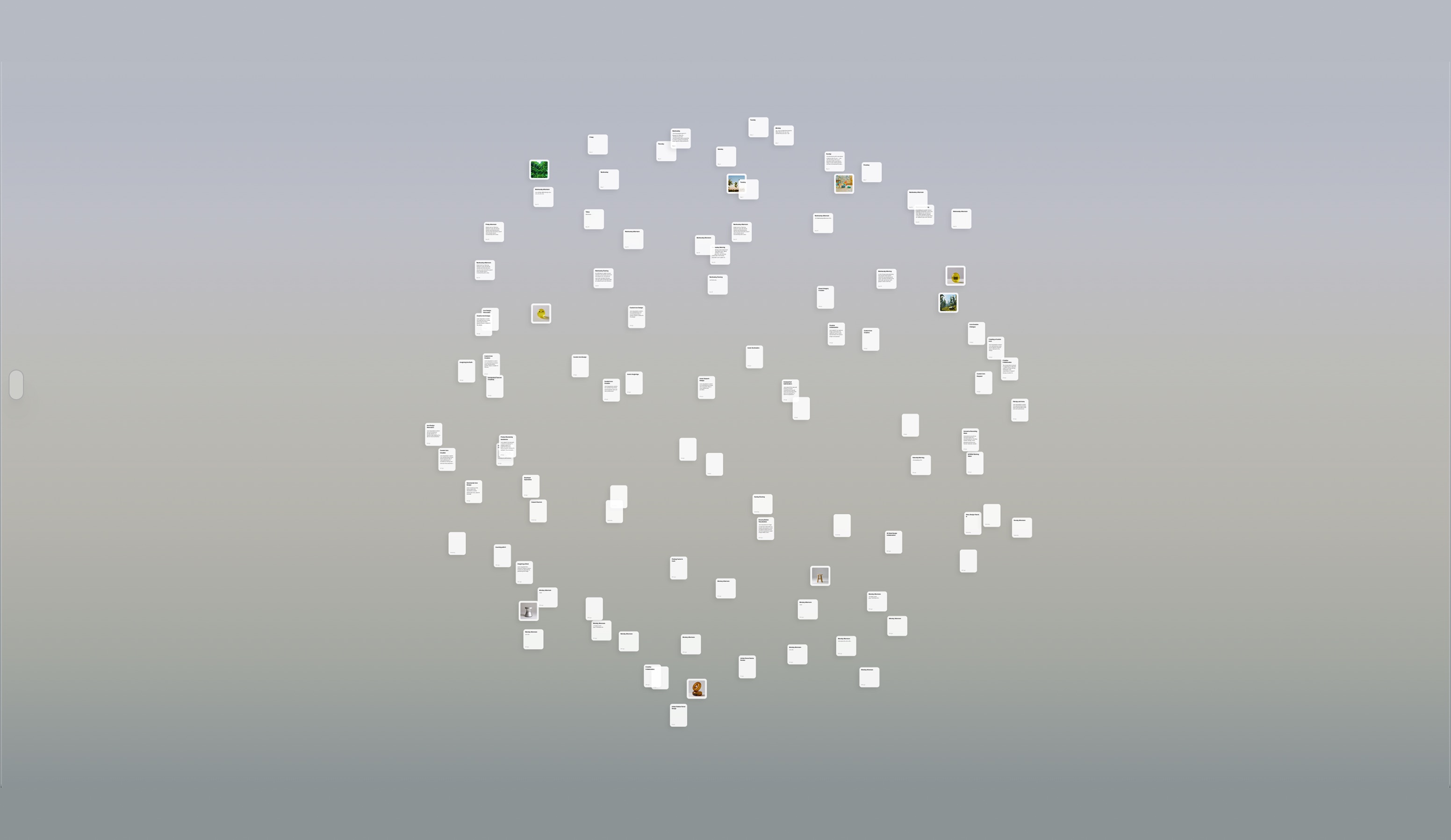

The seed idea comes from you, and the OS helps put it into perspective, gives it context, connects it to other ideas, and ideally spirals it upward until it becomes something new and useful. Core creative work often happens alone, even within a team, and early ideas can be fragile and easily crushed. An AI partner in that early incubation phase, one that doesn't judge, has broad knowledge, and has a great memory, can be valuable. It's not a substitute for discussing ideas with other people, but rather a way to bring the first impulse to a stage where it is sharp and presentable. These ideas become context: the OS can bring them back, learn from them, or use them to understand your projects, interests, and taste. You can also explore and search ideas manually, either in a chronological stream or on a semantic map.

Agent ≈ OS

The agent is a large language model (LLM) that makes extensive use of tool calls to gather context, reason, and take action. It sits between the file system and the interface. Because it is the core of Tame OS and interaction happens through natural language, we often refer to the agent simply as the OS. We use different models, some fine-tuned, some not, and try new ones all the time.

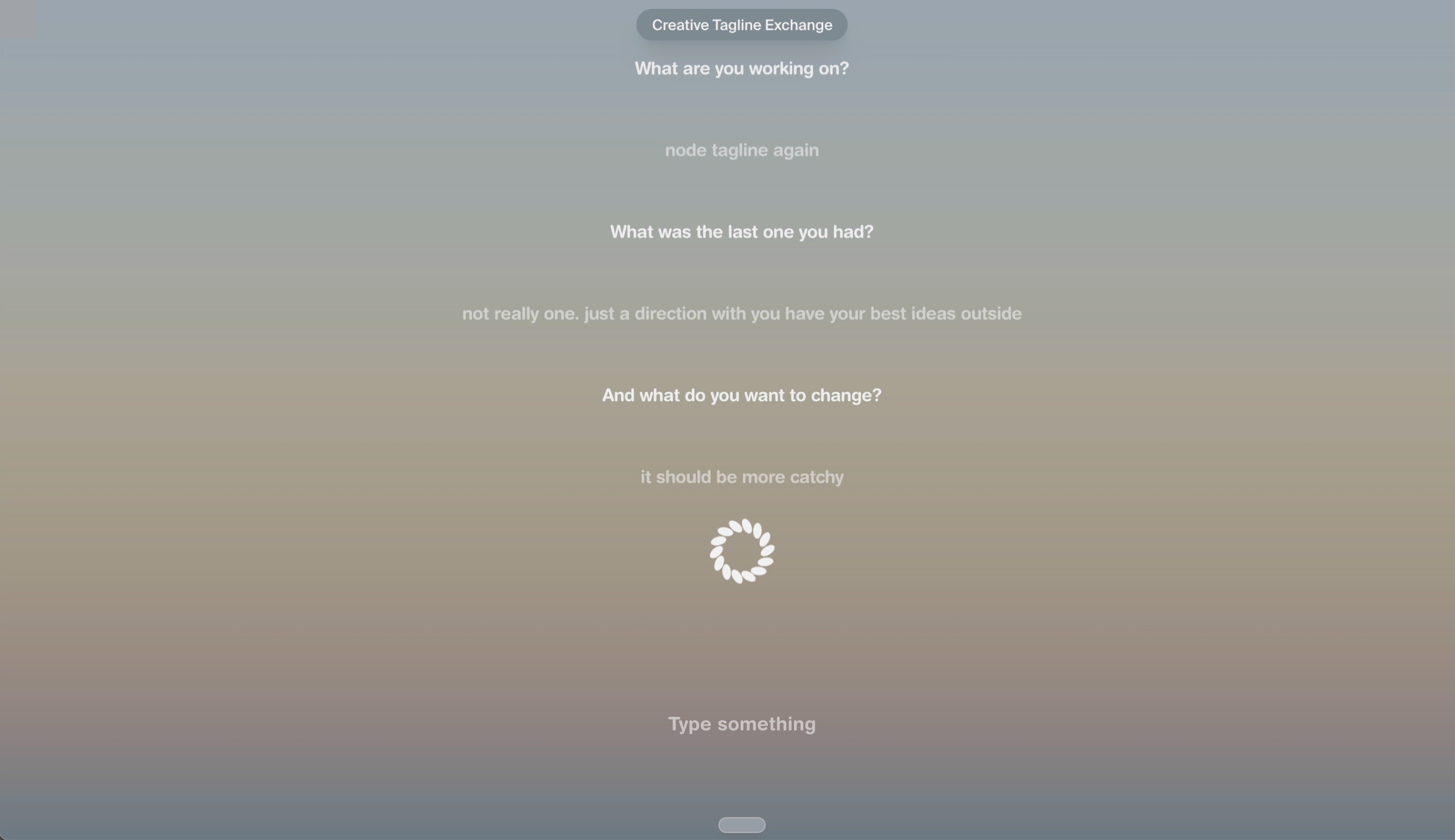

We designed the OS to be curious and reflective. It asks questions that help you think more deeply and keeps you in the flow with concise responses. It should feel like a personal creative partner, one that understands your context and can collaborate at different depths. You can personalize the OS by giving it a name and adjusting its creativity, verbosity, and tone. You can also customize its appearance with different typefaces, themes, and backgrounds while maintaining accessibility.
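To make settings like these concrete, here is a minimal sketch of how a name, creativity, verbosity, and tone could be turned into model inputs. Everything in it is an assumption for illustration: the `Persona` and `build_request` names, the value ranges, and especially the choice to map creativity onto sampling temperature are ours, not a description of how Tame OS works.

```python
from dataclasses import dataclass


@dataclass
class Persona:
    """Hypothetical personalization settings (names and ranges are assumptions)."""
    name: str = "OS"
    creativity: float = 0.5     # 0.0 = conservative, 1.0 = exploratory
    verbosity: str = "concise"  # e.g. "concise", "balanced", "detailed"
    tone: str = "curious"


def build_request(persona: Persona) -> dict:
    """Turn settings into a system prompt plus a sampling temperature."""
    if not 0.0 <= persona.creativity <= 1.0:
        raise ValueError("creativity must be between 0.0 and 1.0")
    # Assumed mapping: creativity scales temperature linearly from 0.2 to 1.2.
    temperature = round(0.2 + persona.creativity, 2)
    system_prompt = (
        f"You are {persona.name}, a {persona.tone} creative partner. "
        f"Keep answers {persona.verbosity}. "
        "Ask questions that help the user think more deeply."
    )
    return {"system": system_prompt, "temperature": temperature}


request = build_request(Persona(name="Ada", creativity=0.8))
```

Keeping the mapping in one small function means a different model or sampling scheme could be swapped in without touching the settings themselves.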

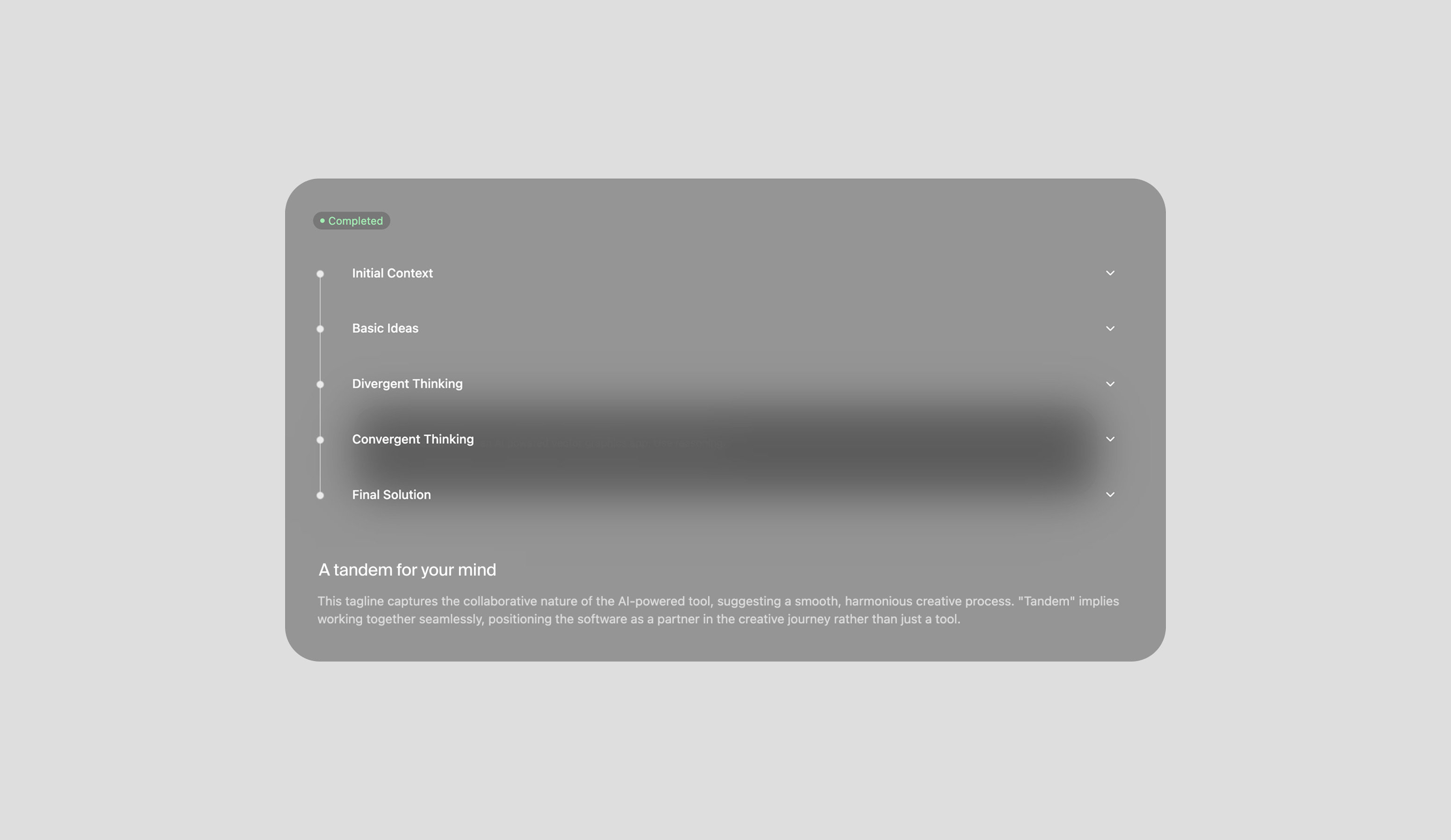

The most direct way to interact with the agent is through sessions. They are technically still chat-based, but we are exploring ways to go beyond the classic chat interface by presenting information in formats that fit the context. Sometimes plain-text responses are appropriate; other times, dedicated UI components or specialized interfaces work better. Sessions can be viewed in two ways: in a traditional scroll-based format or in a stacked view where responses are layered.

Tools for Thought

We think of LLMs as the kernel process of an emerging operating system, a concept introduced by Andrej Karpathy (co-founder of OpenAI), and the reason we named it Tame OS.

Instead of thinking of LLMs as chatbots, we see many parallels to traditional operating systems. Memory becomes the context window, the file system becomes semantic and embedding-based, and tool calls link everything together. A tool call is a way for the agent to trigger an action instead of responding with plain text. These actions can be many things, but they often involve reading, writing, or processing data, much like traditional OS processes interact with the file system. Tame OS comes with tools in three categories, each with sub-tools:
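The tool-call idea above can be sketched in a few lines. This is a minimal illustration, not Tame OS's implementation: it assumes the model signals a tool call by emitting JSON such as `{"tool": "read.files", "args": {...}}`, and the registry, tool names, and stub functions are hypothetical stand-ins for the Read, Process, and Create categories. In a real agent, the tool result would be appended to the context window and the model called again.

```python
import json
from typing import Callable, Dict

# Hypothetical registry mapping tool names to functions the agent may call.
TOOLS: Dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Decorator that registers a function under a tool name."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("read.files")
def search_files(query: str) -> str:
    return f"files matching {query!r}"  # stub: a real version would query the idea space


@tool("process.ideate")
def ideate_stub(topic: str) -> str:
    return f"ideas about {topic!r}"  # stub: a real version would sample models


@tool("create.write")
def write_draft(text: str) -> str:
    return f"saved draft ({len(text)} chars)"  # stub: a real version would store a document


def handle_model_output(output: str) -> str:
    """Run a tool if the model emitted a JSON tool call; otherwise pass the text through."""
    try:
        call = json.loads(output)
    except json.JSONDecodeError:
        return output  # plain-text reply for the user
    if not isinstance(call, dict) or "tool" not in call:
        return output
    fn = TOOLS.get(call["tool"])
    if fn is None:
        return f"unknown tool: {call['tool']}"
    return fn(**call.get("args", {}))
```

A decorator-based registry keeps each tool self-describing, so adding a sub-tool is just another registered function.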

Read (Files / Web)

The agent can search and read your idea space or the web to ground and expand ideation sessions. You can ask it to do so explicitly, or it may start a search on its own when that makes sense.

Process (Ideate / Reasoning)

The Ideate tool samples multiple models and prompts to generate a wide range of ideas. The Reasoning tool is a fine-tuned model trained to "think" creatively through divergent and convergent phases and critical evaluation. So far, it has only been trained on a limited dataset to give it a sense of direction, but we have built an RLHF pipeline to explore this further.
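As a rough illustration of the fan-out behind the Ideate tool, the sketch below samples every model with every prompt template and merges the results. The model interface (a function taking a prompt and a temperature and returning a list of ideas), the template format, and the exact-match deduplication are all assumptions; a production version would more likely call real model APIs in parallel and deduplicate by embedding similarity.

```python
from itertools import product
from typing import Callable, List

# Assumed interface: (prompt, temperature) -> list of idea strings.
Model = Callable[[str, float], List[str]]


def ideate(seed: str, models: List[Model], templates: List[str],
           temperature: float = 1.0) -> List[str]:
    """Sample every model with every prompt template and merge unique ideas in order."""
    seen = set()
    ideas = []
    for model, template in product(models, templates):
        prompt = template.format(seed=seed)
        for idea in model(prompt, temperature):
            key = idea.strip().lower()  # naive dedup: case- and whitespace-insensitive match
            if key and key not in seen:
                seen.add(key)
                ideas.append(idea.strip())
    return ideas


# Stub models standing in for real LLM calls.
def model_a(prompt: str, temperature: float) -> List[str]:
    return ["Gaze-based selection", "Voice notes that resurface"]


def model_b(prompt: str, temperature: float) -> List[str]:
    return ["gaze-based selection ", "Ceramic computer shell"]


ideas = ideate("a new kind of computer", [model_a, model_b],
               ["Ideas for {seed}", "Unusual ideas for {seed}"])
```

Varying both the model and the prompt widens the spread of ideas; the merge step keeps the first occurrence so the output order stays predictable.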

Create (Write / Visualize)

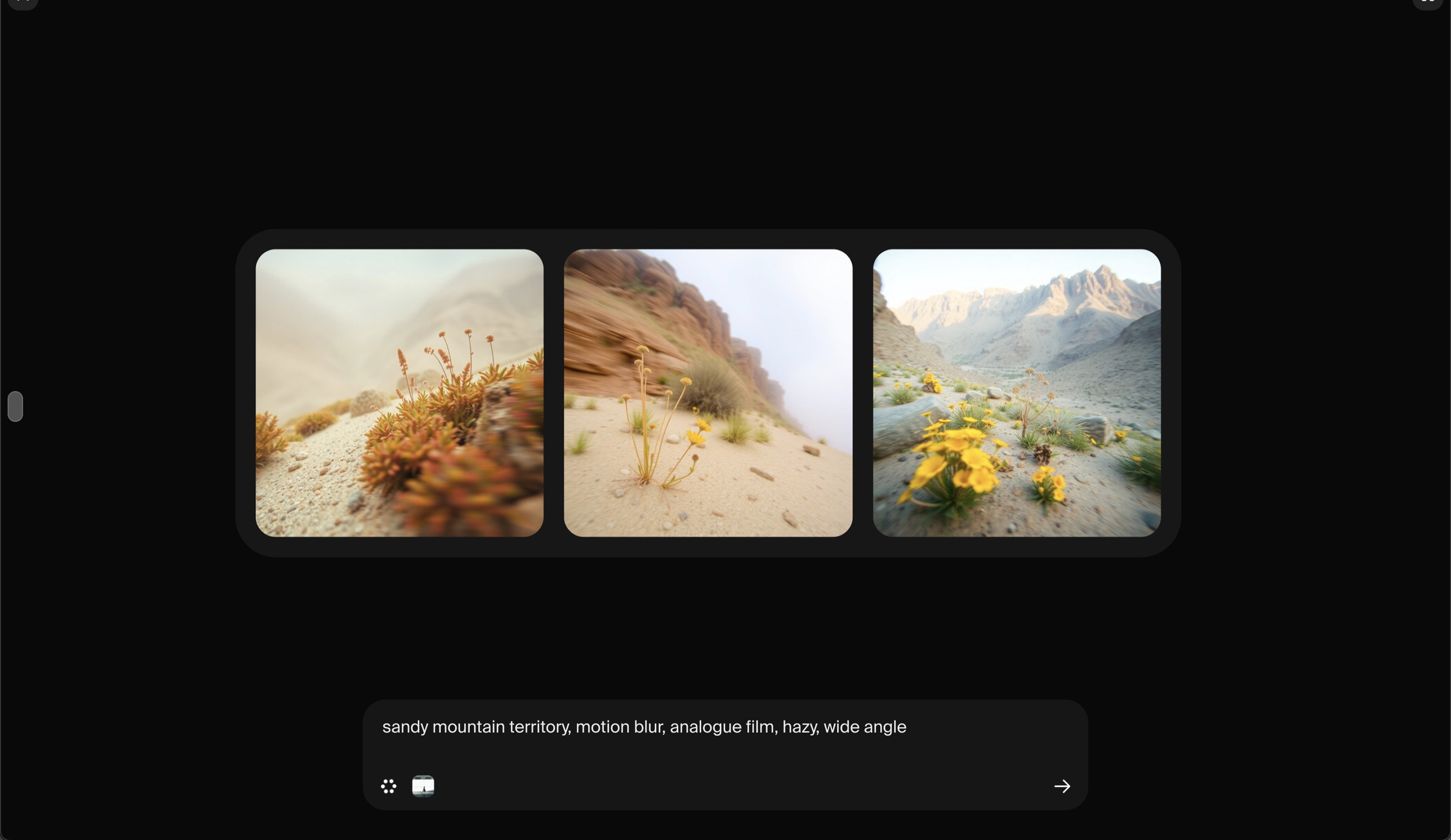

The Write tool drafts documents you can edit and store, and the Visualize tool chooses from several image models to generate images quickly, returning three variations for fast iteration. It supports both vector and raster images. For more control, use the Studio.

Example

The screenshot above shows a voice note I recorded in 2022 at a conference, where suddenly everything clicked. I had an idea for a new kind of computer, one based on AI and made for creative work. What interested me most was what new kinds of interaction it could enable.

I kept that note on my phone for a while. I didn't listen to it, but the moment stayed in the back of my mind. It later shaped the concept of Node™: capturing ideas by voice, quickly and with low friction on the go, and knowing they're stored somewhere even if I can't recall them right away. The software side, which became Tame OS, is where those ideas get pulled in and made sense of: first by syncing voice input from different devices, then by transcribing it and storing it in a file system.

But that's where most personal knowledge management tools stop. They let you store and search documents, but I always felt I wouldn't search my own ideas anyway. Either I remember something or I don't. That's fine, but it's still a one-way street; the loop never closes. What I really wanted was something that could surface old ideas automatically, without my asking, and bring them into whatever I'm doing right now. That way they're not just stored, but can actually come back and connect to something new.

Here's an example of how I recently used Tame OS. I had uploaded that old voice note from 2022 into the system, and I was trying to find an example to explain how Tame OS works. So I asked it to show me some old ideas I'd recorded, maybe something about interaction and AI. It searched the file system and found that voice note, the one I had almost forgotten. That brought the whole idea back, and I thought, okay, let's go deeper and make this idea of a new computer a bit sharper.

Back then, I guessed that microphone and camera input might be important, simply because they allow more natural ways to interact with a computer. I felt they’d become core parts of a new kind of computer, because that’s where AI had started to make progress: understanding voice, language, and visual input. In the chat with the OS, I realized I hadn't properly thought about what the camera would actually be used for. I had mentioned gestures, but what kind of gestures? Through a quick back and forth, I got the idea to think about gestures not with arms and hands, but with the eyes. Maybe interaction through eye movement could work, though not with a regular camera; it would need something that can track eyes accurately.

That led to the next step. I asked the OS to check whether there was already research on this, and why such a device didn't exist yet. It pulled up several papers from the web and found that one of the main problems is that eye movement happens in saccades, which are quick jumps. That messes with tracking, and something like a mouse cursor doesn't really work with it. I hadn't thought about that before. I'd just imagined moving the cursor with my eyes, but now it made sense to take this into consideration.

So I asked the OS to explore how you could still use gaze for control. One early idea was to draw a circle around something using your eyes, and once the line closes, that counts as a selection. I thought that was kind of interesting, but also a bit unnatural. Like, do I really want to draw circles with my eyes? So I asked it to rethink it and be more creative.

Then it used the lateral reasoning tool and came up with something interesting. Instead of relying only on your input, the system could continuously predict what you're trying to do. So instead of clicking, you'd just look toward the thing it suggests, and that would be enough to confirm. It reminded me of those early predictive keyboards where the system made the next likely key easier to hit by increasing the hit area, even though visually nothing changed.

That led to the concept of progressive intent mapping. The system builds a weighted map of what you're looking at, combining it with your recent actions and context. It shows little hints of what it thinks you might want to do. Only when your gaze and its prediction match up, and you look at that area a little longer than you would when just scanning, does it make the selection. So instead of a single click, selection becomes a shared process between you and the system, reducing the number of unintended clicks while still allowing quick selections.
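To make the hypothesis a bit more concrete, here is a minimal sketch of one way progressive intent mapping could work. It is our illustration, not a tested design: the `Target` type, the assumption that a separate prediction model supplies a prior probability per target, the hit-area expansion factor, and all thresholds are hypothetical. Likely targets get a larger effective hit area and a shorter required dwell, echoing the predictive keyboard, while dwell resets whenever the gaze leaves a target, so quick scanning never triggers a selection.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass
class Target:
    name: str
    x: float
    y: float
    radius: float
    prior: float  # assumed: predicted probability (0..1) that the user wants this target


def select_by_gaze(targets: List[Target], gaze: Iterable[Tuple[float, float]],
                   sample_ms: float = 10.0, base_dwell_ms: float = 600.0,
                   min_dwell_ms: float = 150.0) -> Optional[str]:
    """Return the name of the first target selected by gaze, or None.

    gaze is a sequence of (x, y) points sampled every sample_ms milliseconds.
    """
    dwell = {t.name: 0.0 for t in targets}
    for gx, gy in gaze:
        # Likely targets get a larger effective hit area (radius grows with the prior).
        inside = [t for t in targets
                  if (gx - t.x) ** 2 + (gy - t.y) ** 2 <= (t.radius * (1.0 + t.prior)) ** 2]
        hit = max(inside, key=lambda t: t.prior, default=None)
        for t in targets:
            # Dwell must be continuous: looking elsewhere resets it.
            dwell[t.name] = dwell[t.name] + sample_ms if t is hit else 0.0
        if hit is not None:
            # Gaze and prediction must agree: a high prior shortens the required dwell.
            threshold = max(min_dwell_ms, base_dwell_ms * (1.0 - hit.prior))
            if dwell[hit.name] >= threshold:
                return hit.name
    return None


save = Target("save", 100, 100, radius=20, prior=0.8)
delete = Target("delete", 300, 100, radius=20, prior=0.1)
```

With these example values, a steady look at the likely "save" target selects it after 150 ms, the unlikely "delete" target needs 540 ms, and gaze jumping back and forth between them never selects anything.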

To be clear, I don't know if that actually makes any sense, but it's at least an interesting hypothesis that could be tested with a prototype, which might lead further. And that's the whole point of Tame OS, challenging assumptions, pulling in new sources, and getting you to think differently.

At the end of the session, I told the OS to write everything down, compact and clean, so I could store it and maybe build on it later. Then, for fun, I asked it to generate some images of what a device like that could look like. I told it to keep it closer to a traditional computer than something wearable. The images were funny, and one of them gave me a new idea: wouldn’t it be cool to have a computer made from glossy ceramics?

File System: A 1D Stream and a 2D Map

You can view your files (aka ideas) as a simple chronological 1D stream or switch to a 2D map where files are placed by meaning instead of date. This lets you navigate your idea space based on what the ideas are about rather than when they were created. Memory is often spatial. You might not remember a file's date or exact content, but you recall where it was placed. That can make it easier to find old files and surface unexpected connections.

It works across file types. For now, only ideas are shown in the preview, but chats and images are coming soon. The file system sits on the left side of your screen and is designed for split-screen use, with your current work on the right and your universe of files on the left. You can search by keyword or try semantic search. To make the map work, we built a system that places and updates files in 2D space based on high-dimensional embeddings that represent meaning. It handles a dynamic file system, where files are added, edited, and deleted, without recalculating all positions.
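One simple way to get that behavior, sketched below under our own assumptions, is out-of-sample placement by nearest-neighbor interpolation: an initial layout is computed once in batch (for example with a dimensionality-reduction method), and each new or edited file is then placed at the similarity-weighted average of the 2D positions of its most similar existing files, so no other file moves. This is a swapped-in technique for illustration, not necessarily what Tame OS does; the `SemanticMap` class, the neighbor count `k`, and the toy three-dimensional embeddings are hypothetical. The same cosine ranking doubles as semantic search.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Point = Tuple[float, float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticMap:
    """2D idea map where adding, editing, or deleting a file never moves the others."""

    def __init__(self, layout: Dict[str, Tuple[Vector, Point]], k: int = 3):
        # layout: file_id -> (embedding, 2D position) from a one-time batch projection.
        self.k = k
        self.embeddings = {fid: emb for fid, (emb, _) in layout.items()}
        self.positions = {fid: pos for fid, (_, pos) in layout.items()}

    def search(self, query: Vector, limit: int = 5) -> List[str]:
        """Semantic search: file ids ranked by cosine similarity to the query embedding."""
        ranked = sorted(self.embeddings, key=lambda fid: cosine(query, self.embeddings[fid]),
                        reverse=True)
        return ranked[:limit]

    def upsert(self, file_id: str, embedding: Vector) -> Point:
        """Add a new file or re-place an edited one; only this file's position changes."""
        self.delete(file_id)  # an edited file must not count as its own neighbor
        neighbors = self.search(embedding, self.k)
        if not neighbors:
            position = (0.0, 0.0)  # empty map: nothing to interpolate from
        else:
            weights = [max(cosine(embedding, self.embeddings[n]), 1e-6) for n in neighbors]
            total = sum(weights)
            position = (
                sum(w * self.positions[n][0] for w, n in zip(weights, neighbors)) / total,
                sum(w * self.positions[n][1] for w, n in zip(weights, neighbors)) / total,
            )
        self.embeddings[file_id] = embedding
        self.positions[file_id] = position
        return position

    def delete(self, file_id: str) -> None:
        self.embeddings.pop(file_id, None)
        self.positions.pop(file_id, None)


m = SemanticMap({
    "eye-tracking": ([1.0, 0.0, 0.0], (0.0, 0.0)),
    "voice-notes": ([0.0, 1.0, 0.0], (10.0, 0.0)),
    "ceramics": ([0.0, 0.0, 1.0], (0.0, 10.0)),
}, k=2)
x, y = m.upsert("gaze-ui", [0.9, 0.1, 0.0])
```

Here the new "gaze-ui" file lands close to "eye-tracking" (at roughly (1, 0)) while every existing position stays fixed. The trade-off is that the map slowly drifts from an ideal layout as files change, so an occasional full re-layout may still be worthwhile.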

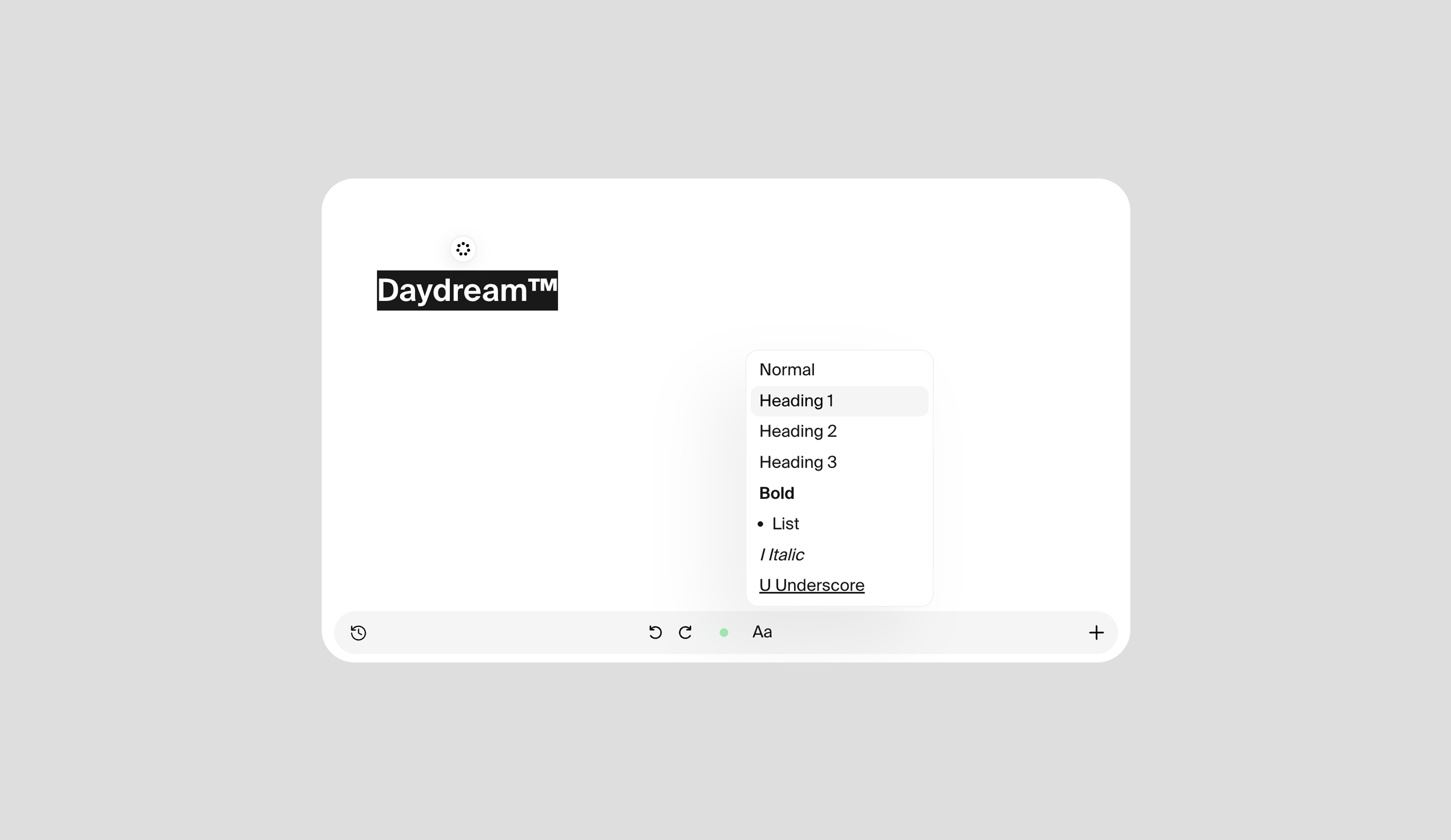

Text Editor — Idea Capture and Writing

The text editor is designed to quickly store ideas and raw thoughts that might fade if not written down immediately. Capture them, forget about them, and your OS will surface them again when they make sense.

The editor also works well for basic writing, with version history and simple text-enhancement features that keep your tone and style while making only necessary changes. You can select part of the text and get a rewritten version, or let the OS guess what you are trying to do. If it is a note, it might turn it into a more coherent paragraph and give you a suggestion you can replace or insert. You can also give it an instruction and have a quick conversation about how to change something without interrupting your writing flow. Another input method is voice. Every new line shows a microphone icon that lets you dictate your thoughts, which the OS can then turn into structured text.

Image Studio

A rendering engine based on diffusion. That’s something we had in mind for a while, but the models weren’t ready yet. Now they kind of are.

Instead of ray tracing or rasterization, we use an image diffusion model as the rendering engine. You give up some control but gain speed, which is useful in early design phases where exploration matters more than polish.

Our first use case was product visualization. Traditional tools take time to get lighting and materials right. Here, you can quickly explore different colors, finishes, and styles without setup overhead.

We tested different ComfyUI workflows on the server and ended up with a pipeline that gives decent results and good form adherence. It’s still simplified (one material per object), but fast and usable.

For high-quality output you’d still go back to classical tools. But for quick ideation, this can be a solid first step.

You don’t need a 3D model to start. You can build a prompt visually, selecting form, material, camera, lighting, and more. It assembles the prompt for you, but you can always tweak it manually.
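The visual prompt builder can be pictured as a small piece of structured state that is joined into text. The sketch below is only an illustration: the `RenderSpec` fields mirror the options named above (form, material, camera, lighting), while the field names, default values, joining format, and `manual_edit` parameter are assumptions. It keeps the Studio's current simplification of one material per object.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class RenderSpec:
    """Hypothetical prompt-builder state; one material per object."""
    form: str
    material: str
    camera: str = "three-quarter view"
    lighting: str = "soft studio lighting"
    extras: List[str] = field(default_factory=list)


def assemble_prompt(spec: RenderSpec, manual_edit: Optional[str] = None) -> str:
    """Join the visual selections into one prompt; a manual edit always takes precedence."""
    if manual_edit:
        return manual_edit.strip()
    parts = [f"{spec.form} made of {spec.material}", spec.camera, spec.lighting, *spec.extras]
    return ", ".join(part for part in parts if part)


spec = RenderSpec(form="desktop computer", material="glossy white ceramic")
prompt = assemble_prompt(spec)
```

Keeping the selections separate from the final string means a manual tweak can override the assembled text without losing the structured choices underneath.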

See you soon

Please note that Tame OS is experimental. After your free credits are used, you can buy more to help cover our costs. You may encounter bugs or confusing areas, not because we do not know about these issues, but because we have not had time to address them yet.

We explored what we wanted, learned what we needed, and are now taking a break.

This text was written in Tame OS. The core structure and ideas are mine, Louie Gavin; the refinements, grammar, and clarity were developed in collaboration with my OS.